This section overlaps with objectives 1.1 (Advanced storage management) and 1.2 (Storage capacity) but covers the multipathing functionality in more detail.

Knowledge

- Explain the Pluggable Storage Architecture (PSA) layout

Skills and Abilities

- Install and Configure PSA plug-ins

- Understand different multipathing policy functionalities

- Perform command line configuration of multipathing options

- Change a multipath policy

- Configure Software iSCSI port binding

Tools & learning resources

- Product Documentation

- vSphere Client

- vSphere CLI

- esxcli

- VMware KB articles

Understanding the PSA layout

The PSA layout is well documented here, here. The PSA applies to block level protocols (FC and iSCSI) only; it isn’t used for NFS.

Terminology;

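- MPP = Multipathing Plugin. Either the NMP or a third party plugin that claims paths and manages multipathing for them.

- NMP = Native Multipathing Plugin, VMware’s default MPP. It coordinates one or more SATPs and one or more PSPs.

- SATP = Storage Array Type Plugin. Handles array specific operations such as monitoring path state and path failover (the ‘traffic cop’).

- PSP = Path Selection Plugin. Chooses which physical path each I/O request uses (the ‘driver’).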

There are four possible pathing policies;

- MRU = Most Recently Used. Typically used with active/passive (low end) arrays.

- Fixed = The path is fixed, with a ‘preferred path’. On failover the alternative paths are used, but when the original path is restored it again becomes the active path.

- Fixed_AP = new to vSphere 4.1. This enhances the ‘Fixed’ pathing policy to make it applicable to active/passive arrays and ALUA capable arrays. If no user preferred path is set it will use its knowledge of optimised paths to set preferred paths.

- RR = Round Robin
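On the command line each policy is referred to by its PSP plugin name rather than the short name above. A minimal sketch of the mapping follows; the helper name `psp_name` is hypothetical, while the `VMW_PSP_*` values are the plugin names the NMP reports.

```shell
#!/bin/sh
# psp_name: hypothetical helper that maps a policy's short name to the
# PSP plugin name used on the esxcli command line.
psp_name() {
    case "$1" in
        MRU)      echo "VMW_PSP_MRU" ;;
        Fixed)    echo "VMW_PSP_FIXED" ;;
        Fixed_AP) echo "VMW_PSP_FIXED_AP" ;;
        RR)       echo "VMW_PSP_RR" ;;
        *)        echo "unknown policy: $1" >&2; return 1 ;;
    esac
}

psp_name RR
```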

One way to think of ALUA is as a form of ‘auto negotiate’. The array communicates with the ESX host and lets it know the available path to use for each LUN, and in particular which is optimal. ALUA tends to be offered on midrange arrays which are typically asymmetric active/active rather than symmetric active/active (which tend to be even more expensive). Determining whether an array is ‘true’ active/active is not as simple as you might think! Read Frank Denneman’s excellent blogpost on the subject. Our Netapp 3000 series arrays are asymmetric active/active rather than ‘true’ active/active.

Install and configure a PSA plugin

There are three possible scenarios where you need to install/configure a PSA plugin;

- Third party MPP – add/configure a vendor supplied plugin

- SATPs – configure the claim rules

- PSPs – set the default PSP for a SATP

Installing/configuring a new MPP

Installing a third party MPP (to supplement the NMP) is done either through the command line (by using esxupdate or vihostupdate with a vendor supplied bundle) or through Update Manager, depending on the vendor’s support. PowerPath by EMC is one of the best known third party MPPs. Instructions for installing it can be found in VMware KB 1018740 or in this whitepaper on RTFM.

After installing the new MPP you may need to configure claim rules which determine which MPP is used – the default NMP (including MASK_PATH) or your newly installed third party MPP.

To see which MPPs are currently used;

esxcli corestorage claimrule list

To change the owner of any given LUN (for example);

esxcli corestorage claimrule add --rule 110 --type location -A vmhba33 -C 0 -T 0 -L 4 -P <MPPname>

esxcli corestorage claimrule load

esxcli corestorage claiming unclaim --type location -A vmhba33 -C 0 -T 0 -L 4

esxcli corestorage claimrule run

To check the new settings;

esxcli corestorage claimrule list
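The add, load, unclaim and run sequence is easy to get wrong by hand, so it can help to generate it. The sketch below only prints the commands so they can be reviewed before use; `claim_location` is a hypothetical helper name, and MASK_PATH is used as the example plugin purely for illustration.

```shell
#!/bin/sh
# claim_location: hypothetical helper. Prints (does not run) the commands
# that hand one LUN, identified by adapter/channel/target/LUN, to an MPP.
claim_location() {
    rule=$1 adapter=$2 channel=$3 target=$4 lun=$5 plugin=$6
    loc="--type location -A $adapter -C $channel -T $target -L $lun"
    echo "esxcli corestorage claimrule add --rule $rule $loc -P $plugin"
    echo "esxcli corestorage claimrule load"
    echo "esxcli corestorage claiming unclaim $loc"
    echo "esxcli corestorage claimrule run"
}

# Review the output first; nothing is executed by this script.
claim_location 110 vmhba33 0 0 4 MASK_PATH
```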

Configuring an SATP

You can configure claim rules to determine which SATP claims which paths. This allows you to configure a new storage array from MyVendor (for example) so that any devices belonging to that storage array are automatically handled by a SATP of your choice.

To see a list of available SATPs and the default PSP for each;

esxcli nmp satp list (OR esxcfg-mpath -G to just see the SATPs)

To list the existing rules which determine how each SATP claims paths;

esxcli nmp satp listrules

NOTE: We use Netapp 3000 series arrays and they use the generic VMW_SATP_ALUA SATP. This has MRU as its default PSP even though RR is the ‘best practice’ recommendation for this storage array. If we change the default PSP however and then introduce a different array to our environment (which also uses the same SATP) it could get an unsuitable policy so the solution is to add a claimrule specific to our Netapp model;

esxcli nmp satp addrule --vendor="Netapp" --model="3240AE" --satp=VMW_SATP_ALUA

NOTE: This is different to the claimrules at the MPP level (esxcli corestorage claimrule)

Configuring the default PSP for a SATP

To change the default PSP;

esxcli nmp satp setdefaultpsp --satp VMW_SATP_ALUA --psp VMW_PSP_RR

Administering path policy (GUI)

Viewing the multipathing properties (GUI)

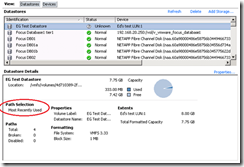

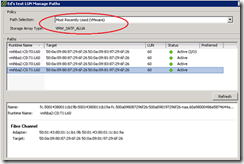

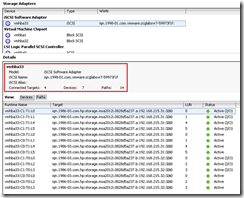

From the VI client go to Configuration -> Storage, select the Datastore and then Properties -> Manage Paths. This will show the SATP and PSP in use;

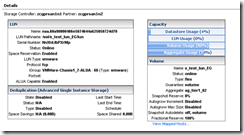

Often you’ll have access to vendor tools as well as the VI client but this doesn’t always help. The Netapp plugin, for example, doesn’t show the multipathing policy for a LUN although it does show the various ‘layers’ of storage and whether ALUA is enabled or not.

Changing the path policy (GUI)

- Go to ‘Manage Paths’ and set the correct type.

- Should be set consistently for every host with access to the LUN.

- Use ESXTOP to monitor traffic on each HBA to ensure the paths are working as expected.

Administering path policy (CLI)

In general the CLI is more useful than the VI client because it’s common to have multiple LUNs connected to multiple hosts (in an HA/DRS cluster for example). Rather than manually adjusting lots of paths the CLI can be scripted.

Changing a specific path policy (CLI)

Setting the path policy for a LUN, path or adaptor is very similar to viewing it;

esxcli nmp device setpolicy --device naa.xxx --psp VMW_PSP_RR
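Since the point of the CLI is that it can be scripted, here is a minimal sketch of applying one policy to many devices. It only prints the commands; `set_policy_cmds` is a hypothetical helper name and the naa IDs are placeholders.

```shell
#!/bin/sh
# set_policy_cmds: hypothetical helper. Reads device IDs (one per line) on
# stdin and prints a setpolicy command for each, without running anything.
set_policy_cmds() {
    psp=$1
    while read -r dev; do
        [ -n "$dev" ] || continue      # skip blank lines
        echo "esxcli nmp device setpolicy --device $dev --psp $psp"
    done
}

# The naa IDs below are placeholders, not real devices.
printf '%s\n' naa.aaa naa.bbb | set_policy_cmds VMW_PSP_RR
```

Printing rather than executing makes it easy to check the list of devices before changing anything, which matters when the same LUNs are shared by every host in a cluster.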

NOTE: esxcli doesn’t work as you’d expect with vi-fastpass. You’ll still need to specify --server as discussed on this VMware community thread.

NOTE: With the release of PowerCLI 4.1.1 you can now set this using PowerCLI. See this post from Arnim Van Lieshout for details.

Viewing the multipathing policy (CLI)

To list all LUNs and see their multipathing policy;

esxcli --server <myhost> --username root --password <mypw> nmp device list

NOTE: You get a similar output from ‘vicfg-mpath -l’ but that doesn’t show the PSP in use or the working path.

To check a particular datastore to ensure its multipathing is correct;

vicfg-scsidevs --vmfs (to get the naa ID for the datastore in question)

esxcli nmp device list --device naa.60a98000486e5874644a625058724d70 (using the naa ID obtained from the first command)
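To check many devices at once, the device list output can be reduced to one line per device. This is a sketch under a stated assumption: that each device block starts with the naa ID in column one, followed by indented "Path Selection Policy: <name>" lines, as in the sample input below. `psp_report` is a hypothetical helper name and the IDs are placeholders.

```shell
#!/bin/sh
# psp_report: hypothetical helper. Reads `esxcli nmp device list` output on
# stdin and prints "<device> <PSP>" per device. ASSUMES the layout shown in
# the sample below (naa ID in column one, space-indented detail lines).
psp_report() {
    awk '
        /^naa\./                    { dev = $1 }
        /^ +Path Selection Policy:/ { print dev, $NF }
    '
}

# Sample input with placeholder IDs; on a real host, pipe the command in.
psp_report <<'EOF'
naa.aaa
    Device Display Name: Example Disk (naa.aaa)
    Storage Array Type: VMW_SATP_ALUA
    Path Selection Policy: VMW_PSP_MRU
    Working Paths: vmhba1:C0:T0:L0
naa.bbb
    Device Display Name: Example Disk (naa.bbb)
    Storage Array Type: VMW_SATP_ALUA
    Path Selection Policy: VMW_PSP_RR
    Working Paths: vmhba1:C0:T0:L1
EOF
```

Piping the result through grep for the expected policy name is then a quick way to spot devices that were missed.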

Configuring the Round Robin load balancing algorithm

When you configure Round Robin there are a set of default parameters;

- Use non-optimal paths: NO

- IOps per path: 1000 (ie swap to another path after 1000 I/O operations have been sent down the current path)

Some vendors recommend changing the IOps value to 1 instead of 1000 which you can do like so;

esxcli nmp roundrobin setconfig --device naa.xxx --type iops --iops 1
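To see what the IOps value actually changes, here is a toy model of the rotation. It is an illustration only, not VMware code: it assumes the paths are used in strict order and ignores the optimal/non-optimal distinction. `rr_path` is a hypothetical helper name.

```shell
#!/bin/sh
# rr_path: hypothetical illustration, not VMware code. Given the IOps limit,
# the number of paths and a zero-based I/O sequence number, print the index
# of the path that I/O would use if paths are rotated in strict order.
rr_path() {
    limit=$1 paths=$2 io=$3
    echo $(( (io / limit) % paths ))
}

rr_path 1000 2 999    # still on the first path (index 0)
rr_path 1000 2 1000   # limit reached, moved to the second path (index 1)
rr_path 1 2 3         # with a limit of 1 the path changes on every I/O
```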

Food for thought – an interesting post from Duncan Epping about RR best practices.

Summary of useful commands

| Command | Purpose |
| --- | --- |
| esxcli corestorage claimrule | Claimrules to determine which MPP gets used. Typically used with MASK_PATH. Not to be confused with the esxcli nmp satp rules which determine which SATP (within a given MPP) is used. |
| esxcli nmp satp addrule | Add rules to determine which SATP (and PSP) is used for a given device/vendor/path |
| esxcli nmp device setpolicy | Configure the path policy for a given device, path, or adaptor |
| esxcli nmp satp setdefaultpsp | Configure the default pathing policy (such as MRU, RR or Fixed) for a SATP |
| esxcli nmp psp setconfig / getconfig | Specific configuration parameters for each path policy. Typically used with RR algorithm (IOps, or bytes) |
| esxcfg-scsidevs --vmfs [--device naa.xxx] | Easy way to match a VMFS datastore with its associated naa.xxx ID |

Software iSCSI port binding

Port binding is the process which enables multipathing for iSCSI, a feature new to vSphere. By creating multiple vmKernel ports you can bind each to a separate pNIC and then associate them with the software iSCSI initiator, creating multiple paths. Check out a good post here, more here, plus the usual Duncan Epping post. You can also watch this video from VMware.

If you want to understand the theory behind iSCSI, check this great multivendor post.

The iSCSI SAN Configuration Guide covers this in chapter 2 and the vSphere Command Line Interface Installation and Reference Guide briefly covers the syntax on page 90.

NOTE: This process can also be used for binding a hardware dependent iSCSI adaptor to its associated vmKernel ports. A hardware dependent iSCSI adaptor is simply a NIC with iSCSI offload capability as opposed to a fully-fledged iSCSI HBA.

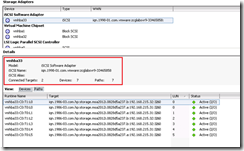

Process summary;

- Create multiple vmKernel ports

- Configure networking so that each vmKernel has a dedicated pNIC

- Bind each vmKernel port to the software iSCSI initiator

- Rescan storage to recognise new paths

Create multiple vmKernel ports

Simply create an additional vmKernel port (with a different IP on the same subnet as the other vmKernel ports). This is done the same way for both vSS and dVS switches.

Configure networking to dedicate a pNIC to each vmKernel port

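You can use separate vSwitches (which enforces separate pNICs) or use a single vSwitch and then use portgroups with explicit failover settings, so that each vmKernel portgroup has exactly one active uplink and the remaining uplinks are set to unused. Both methods work for standard and distributed switches.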

Bind each vmKernel port to the software iSCSI initiator

This is the bit that’s unfamiliar to many, and it can only be done from the command line (locally or via RCLI/vMA);

esxcli swiscsi nic add -n vmk1 -d vmhba33

esxcli swiscsi nic add -n vmk2 -d vmhba33

esxcli swiscsi nic list -d vmhba33 (to confirm configuration)

NOTE: These configuration settings are persistent across reboots, and even if you disable and re-enable the SW iSCSI initiator.

Rescan storage

esxcfg-rescan vmhba33
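The bind and rescan steps can be sketched as a small generator, which is handy when several hosts need the same binding. It prints the commands rather than running them; `bind_cmds` is a hypothetical helper name, and the adapter and vmKernel port names are the examples used above.

```shell
#!/bin/sh
# bind_cmds: hypothetical helper. Prints (does not run) the commands that
# bind each listed vmKernel port to the iSCSI adapter, then rescan it.
bind_cmds() {
    hba=$1; shift
    for vmk in "$@"; do
        echo "esxcli swiscsi nic add -n $vmk -d $hba"
    done
    echo "esxcli swiscsi nic list -d $hba"
    echo "esxcfg-rescan $hba"
}

bind_cmds vmhba33 vmk1 vmk2
```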
