The VCAP-DCA lab is still based on v4.0 (rather than v4.1), which means features such as Network I/O Control (NIOC) and load-based teaming (LBT) aren’t covered. The Nexus 1000V isn’t on the Network objectives blueprint (just the vDS). Even so, it’s worth knowing what extra features it offers, because some objectives might require you to decide whether to use the Nexus 1000V or just the vDS.

Knowledge

- Identify common virtual switch configurations

Skills and Abilities

- Determine use cases for and apply IPv6

- Configure NetQueue

- Configure SNMP

- Determine use cases for and apply VMware DirectPath I/O

- Migrate a vSS network to a Hybrid or Full vDS solution

- Configure vSS and vDS settings using command line tools

- Analyze command line output to identify vSS and vDS configuration details

Tools & learning resources

- Product Documentation

- vSphere Client

- vSphere CLI

- vicfg-*

- TA2525 – vSphere Networking Deep Dive (VMworld 2009 – free access)

- TA6862 – vDS Deep Dive – Managing and Troubleshooting (VMworld 2010, subscription required)

- TA8595 – Virtual Networking Concepts and Best Practices (VMworld 2010, subscription required)

- Design considerations for the vDS (Rich Brambley)

- Catch-22 for vDS and vCenter (Jason Boche)

- Kendrick Coleman’s ‘How to setup the Nexus 1000V in ten minutes’ blog post

Network basics (VCP revision)

Standard switches support the following features (see section 2.3 for more details):

- NIC teaming

  - Based on originating virtual port ID (default)

  - Based on IP hash (used with EtherChannel)

  - Based on source MAC hash

  - Explicit failover order

- VLANs (EST, VST, VGT: external switch, virtual switch and virtual guest tagging)
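The practical difference between the teaming methods is which uplink a given flow uses. The Python sketch below is a simplified model and not the vmkernel algorithm. It assumes IP hash is (source IP XOR destination IP) modulo the number of active uplinks, and that port-ID teaming is simply port ID modulo the number of uplinks. The function names are hypothetical.

```python
import ipaddress


def ip_hash_uplink(src_ip: str, dst_ip: str, uplinks: list[str]) -> str:
    """Pick an uplink with a simplified IP hash: (src XOR dst) mod active uplinks.

    Assumed model for illustration; the vmkernel's exact hash inputs may differ.
    """
    if not uplinks:
        raise ValueError("no active uplinks")
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return uplinks[(src ^ dst) % len(uplinks)]


def port_id_uplink(port_id: int, uplinks: list[str]) -> str:
    """Pick an uplink from the virtual port ID only (assumed model of port-ID teaming)."""
    if not uplinks:
        raise ValueError("no active uplinks")
    return uplinks[port_id % len(uplinks)]


if __name__ == "__main__":
    nics = ["vmnic0", "vmnic1"]
    vm_ip, vm_port = "192.168.0.20", 16
    for peer in ["192.168.0.1", "192.168.0.2"]:
        # IP hash: the uplink depends on the conversation, not just the VM.
        print(peer, ip_hash_uplink(vm_ip, peer, nics))
    # Port ID: every conversation from this VM uses the same uplink.
    print("port", vm_port, port_id_uplink(vm_port, nics))
```

With two uplinks, the VM's conversations with .1 and .2 land on vmnic1 and vmnic0 respectively. Port 16 always maps to vmnic0. This is why IP hash can spread a single VM's traffic across uplinks, but only if the physical switch is configured for EtherChannel.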

vDS Revision

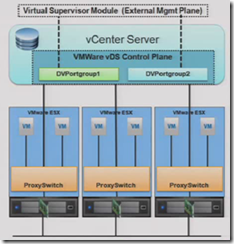

The vNetwork Distributed Switch (vDS) separates the control plane from the data plane. This enables centralised administration and provides extra functionality compared to standard vSwitches. A good summary can be found on GeekSilver’s blog. Benefits:

- Offers both inbound and outbound traffic shaping (standard switches only offer outbound)

  - Traffic shaping can be applied at both dvPortGroup and dvUplink PortGroup level

  - For dvUplink PortGroups, ingress is traffic from the external network coming into the vDS and egress is traffic from the vDS to the external network

  - For dvPortGroups, ingress is traffic from VMs coming into the vDS and egress is traffic from the vDS to VMs

  - Configured via three policies: average bandwidth, peak bandwidth and burst size

- Ability to use a third-party vDS built on top of it (Cisco Nexus 1000V)

- Traffic statistics are available (unlike standard vSwitches)
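The three shaping values interact roughly like a token bucket. While a port sends below its average bandwidth, it builds up a burst bonus, capped at the burst size. It can then spend that bonus by sending above average, up to the peak bandwidth. The back-of-the-envelope Python sketch below makes several assumptions. It uses the vSphere Client units (Kbit/s for the two bandwidths, KB for burst size), treats 1 KB as 8 Kbit, and is a simplified model rather than VMware's implementation. It estimates how long a port with a full bonus can sustain its peak rate.

```python
def peak_burst_seconds(avg_kbps: float, peak_kbps: float, burst_kb: float) -> float:
    """Seconds a port can send at peak_kbps before a full burst bonus is used up.

    Simplified token-bucket model (an assumption, not VMware's implementation):
    the port accumulates bonus while sending below avg_kbps (capped at burst_kb)
    and spends it while sending above avg_kbps, so sending flat out at peak drains
    it at (peak - avg). Units are treated as 1 KB = 8 Kbit for simplicity.
    """
    if avg_kbps <= 0 or burst_kb < 0:
        raise ValueError("average must be positive and burst size non-negative")
    if peak_kbps < avg_kbps:
        raise ValueError("peak bandwidth must be >= average bandwidth")
    if peak_kbps == avg_kbps:
        return float("inf")  # never sends above average, so the bonus never drains
    burst_kbit = burst_kb * 8
    return burst_kbit / (peak_kbps - avg_kbps)


if __name__ == "__main__":
    # Example: 100 Mbit/s average, 200 Mbit/s peak, 102400 KB burst size.
    print(f"{peak_burst_seconds(100_000, 200_000, 102_400):.3f} s at peak")
```

With those example values the port could run at peak for about 8.2 seconds. After that it falls back to its average rate until it has earned more bonus. Setting peak equal to average effectively disables bursting.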

NOTES:

- CDP and MTU are set per vDS (as they are with standard vSwitches).

- PVLANs are defined at switch level and applied at dvPortGroup level.

- There is one dvUplink PortGroup per vDS.

- NIC teaming is configured at the dvPortGroup level but can be overridden at the dvPort level (overriding is disabled by default but can be allowed). This applies to both dvUplink PortGroups and standard dvPortGroups, although on an uplink you CANNOT override the NIC teaming or Security policies.

- Policy inheritance (the lower level takes precedence, but override is disabled by default):

  - dvPortGroup -> dvPort

  - dvUplink PortGroup -> dvUplinkPort
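The inheritance rule can be modelled in a few lines of Python. A dvPort’s own value is used only if the parent group allows override for that policy. On an uplink, NIC teaming and Security always come from the group. The policy names and data layout below are hypothetical and chosen for illustration. They are not vSphere API objects.

```python
from dataclasses import dataclass, field

# Hypothetical policy names; on dvUplink ports these can never be overridden.
UPLINK_LOCKED = {"teaming", "security"}


@dataclass
class PortGroup:
    policies: dict[str, str]
    allow_override: set[str] = field(default_factory=set)  # empty = override disabled (default)
    is_uplink: bool = False


def effective_policy(group: PortGroup, port_overrides: dict[str, str]) -> dict[str, str]:
    """Resolve the policies a dvPort actually uses, given its group and its own settings."""
    result = dict(group.policies)  # start from the inherited (group-level) values
    for name, value in port_overrides.items():
        if name not in group.policies:
            raise KeyError(f"unknown policy: {name}")
        if group.is_uplink and name in UPLINK_LOCKED:
            continue  # uplink ports always inherit teaming and security
        if name in group.allow_override:
            result[name] = value  # lower level takes precedence when allowed
    return result


if __name__ == "__main__":
    pg = PortGroup({"teaming": "port-id", "vlan": "10"})
    print(effective_policy(pg, {"vlan": "20"}))  # override not allowed, so VLAN stays 10
```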

NOTE: Don’t create a vDS with special characters in the name (I used ‘Lab & Management’), as it breaks host profiles. See VMware KB 1034327.

Determine use cases for and apply IPv6

The main use case for IPv6 is IPv4 running out of address space. It isn’t so much a VMware requirement as an Internet requirement. IPv6 is ‘supported’ on ESX/i, but a few vSphere features aren’t compatible:

- ESX installation (you have to install on an IPv4 network)

- VMware HA

- VMware Fault Tolerance

- RCLI

Enabling IPv6 is easily done in the vSphere Client. Go to Configuration -> Networking, click Properties, enable IPv6 support and reboot the host. You can enable IPv6 without configuring any interfaces (Service Console, VMkernel, etc.) with an IPv6 address.

See VMware KB 1010812 for details of using the command line to enable IPv6, and read this blog post by Eric Siebert on IPv6 support.

NetQueue

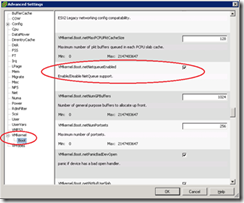

NetQueue (present in ESX 3.5 but improved in vSphere) improves network performance when an ESX host sends or receives large amounts of traffic. It is typically used with 10Gb Ethernet. It works by splitting traffic into multiple queues that are processed in parallel on multiple CPUs. Without NetQueue it is normally impossible to achieve full 10Gb throughput (read more in this Dell whitepaper).

- Enabled by default

- Requires support from the pNIC

- More beneficial with NUMA architectures

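- Enabled/disabled by (a host reboot is required for the change to take effect):

  - The host’s Configuration -> Advanced Settings -> VMkernel (the VMkernel.Boot.netNetqueueEnabled setting)

  - esxcfg-advcfg --set-kernel 1 netNetqueueEnabled (to enable)

  - esxcfg-advcfg --set-kernel 0 netNetqueueEnabled (to disable)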
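The toy Python model below shows why NetQueue can be parallelised. A multi-queue pNIC programs a receive-queue filter per VM MAC address. Frames for unknown MACs, such as broadcasts or VMs beyond the hardware queue count, fall into a default queue. Each queue can then be handed to a different CPU. The class and function names are hypothetical. The model ignores interrupts, driver specifics and queue rebalancing, and it uses Python threads as stand-ins for CPUs.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

DEFAULT_QUEUE = 0  # frames with no matching filter (e.g. broadcasts) land here


class NetQueueModel:
    """Toy model of a multi-queue pNIC: one receive-queue filter per VM MAC address."""

    def __init__(self, num_queues: int):
        if num_queues < 2:
            raise ValueError("need a default queue plus at least one filtered queue")
        self.num_queues = num_queues
        self.filters: dict[str, int] = {}  # VM MAC -> queue index

    def add_filter(self, mac: str) -> int:
        """Give a VM its own queue if the hardware has one free, else use the default."""
        mac = mac.lower()
        if mac in self.filters:
            return self.filters[mac]
        next_queue = len(self.filters) + 1  # queue 0 is reserved as the default
        if next_queue >= self.num_queues:
            return DEFAULT_QUEUE
        self.filters[mac] = next_queue
        return next_queue

    def dispatch(self, frames: list[tuple[str, str]]) -> dict[int, list[str]]:
        """Sort (destination MAC, payload) frames into per-queue lists."""
        queues: dict[int, list[str]] = defaultdict(list)
        for dst_mac, payload in frames:
            queues[self.filters.get(dst_mac.lower(), DEFAULT_QUEUE)].append(payload)
        return dict(queues)


def process_in_parallel(queues: dict[int, list[str]],
                        handler: Callable[[list[str]], object]) -> dict[int, object]:
    """Hand each queue to its own worker; threads stand in for CPUs in this model."""
    if not queues:
        return {}
    with ThreadPoolExecutor(max_workers=len(queues)) as pool:
        futures = {q: pool.submit(handler, frames) for q, frames in queues.items()}
        return {q: f.result() for q, f in futures.items()}
```

Without multiple queues, everything would sit in queue 0 and be processed serially. That is roughly the bottleneck that stops a single-queue NIC from reaching 10Gb.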

As well as enabling the host functionality, you may need to configure the NIC driver with vendor-specific settings, typically using esxcfg-module. See VMware KB 1004278 for details of enabling NetQueue for a specific 10Gb NIC. In the real world, NetQueue performance seems to depend on good driver support. As this article at AnandTech points out, some drivers aren’t much of an improvement over 1Gb.

TA2525 (vSphere Networking Deep Dive) covers this in detail at around the 1hr 15min mark.

Configure SNMP

SNMP can be used to enhance management, typically by providing information either on request (polling) or when events are triggered (trap notification). Sending an SNMP trap is one of the standard alarm actions in vCenter.

Two network ports are used:

- 161/udp – used to receive poll requests

- 162/udp – used to send trap notifications

Configuring SNMP for vCenter

- Go to Administration -> vCenter Settings -> SNMP

- vCenter can only send notification traps, it doesn’t support poll requests.

Configuring SNMP for ESX/i hosts

- Use vicfg-snmp (RCLI) or directly edit configuration files on the ESX/i hosts

NOTE: There is NO esxcfg-snmp, and there is no GUI option for configuring hosts.

To check the current configuration, set a trap target and send a test trap:

vicfg-snmp --show

vicfg-snmp --targets <SNMP Receiver>/community

vicfg-snmp --test
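If you configure several hosts, it can help to generate the vicfg-snmp calls rather than typing them. The Python sketch below only builds argument lists and does not run anything. It assumes the vCLI --server connection option plus vicfg-snmp’s --communities, --targets, --enable, --show and --test options. It also assumes targets in host@port/community form, with multiple targets separated by commas. The helper names and host names are hypothetical, so check the options against vicfg-snmp --help for your vCLI version.

```python
import shlex


def snmp_target(host: str, community: str, port: int = 162) -> str:
    """Format one trap destination as host@port/community for vicfg-snmp --targets."""
    if not host or not community:
        raise ValueError("host and community are required")
    if "," in community or "/" in community:
        # Conservative assumption: keep the target string unambiguous to parse.
        raise ValueError("community string must not contain ',' or '/'")
    if not 0 < port < 65536:
        raise ValueError(f"invalid UDP port: {port}")
    return f"{host}@{port}/{community}"


def vicfg_snmp_commands(server: str, community: str,
                        receivers: list[tuple[str, int]]) -> list[list[str]]:
    """Build (but do not run) the vicfg-snmp calls to configure and test one host."""
    base = ["vicfg-snmp", "--server", server]
    targets = ",".join(snmp_target(h, community, p) for h, p in receivers)
    return [
        base + ["--communities", community],
        base + ["--targets", targets],
        base + ["--enable"],
        base + ["--show"],
        base + ["--test"],
    ]


if __name__ == "__main__":
    # Hypothetical host and receiver names.
    for cmd in vicfg_snmp_commands("esx01.lab.local", "public", [("nms.lab.local", 162)]):
        print(shlex.join(cmd))
```

The agent is enabled before --test so that the test trap is actually sent. vCLI will still prompt for credentials unless you pass them or use a session file.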

Misc:

- By default SNMP is disabled with no targets defined

- ESX has both the Net-SNMP agent and a VMware hostd agent. ESXi only has the VMware agent. VMware specific information is only available from the embedded (hostd) agent.

- Using vicfg-snmp configures the VMware SNMP agent (i.e. it modifies /etc/vmware/snmp.xml). It does NOT configure the Net-SNMP agent.

- When you configure SNMP using vicfg-snmp, the relevant network ports are automatically opened on the firewall. If you edit the config files directly, you’ll need to do this yourself (esxcfg-firewall -o 162,udp,out,snmpd for notification traps).

See VMware KB 1022879 for details of editing the configuration files. Alternatively, watch Eric Sloof’s video How to configure SNMP to see how to use vicfg-snmp. The vSphere Command-Line Interface Installation and Scripting Guide (p.40) covers vicfg-snmp, and the Basic System Administration guide covers it in more depth (pages 50-65).

Configuring the SNMP management server

You should load the VMware MIBs on the server receiving the traps you’ve configured. These MIBs can be downloaded from the vSphere download page on the VMware site. Follow the instructions for your particular management product to load them.

Determine use cases for and apply VMware DirectPath I/O

This was covered in section 1.1. For networking, it can give a VM direct access to a 10Gb NIC when high network throughput is required.

Migrate a vSS network to a hybrid or full vDS solution

Make sure you read the VMware whitepaper and experiment with migrating a vSS to a vDS. There is some debate about whether to use hybrid solutions (both vSS and vDS). See this blog post by Duncan Epping for some background information.

To determine the best deployment method for a vDS, see the VMware white paper. Roughly:

- If new hosts or no running VMs, use host profiles

- If migrating existing hosts or running VMs, use the vDS GUI and ‘Migrate VM Networking’

There are some catch-22 situations with a vDS which you may run into when migrating from a vSS:

- When vCenter is virtual: if you lose vCenter, you can’t manage the vDS, so you can’t get a new vCenter onto the network. See Jason Boche’s thoughts on the subject.

- If an ESXi host loses its management network connection, you may not be able to reconfigure it from the command line (esxcfg-vswitch is limited for vDS operations). An alternative is to use ‘Restore Standard Switch’ from the DCUI. NOTE: This option doesn’t configure a VLAN tag, so if you’re using VLANs you’ll need to reconfigure the management network after resetting to a standard switch.

- If you have a limited number of pNICs, you may also run into problems like those described in Joep Piscaer’s blog post.

I’m not sure about migrating templates to a vDS. Something to investigate…

Command line configuration for vSS and vDS

Commands for configuring vSS

- esxcfg-nics -l

- esxcfg-vswitch

- esxcfg-vmknic

- esxcfg-vswif

- esxcfg-route

Typical command lines:

esxcfg-vswitch -a vSwitch2

esxcfg-vswitch -L vmnic2 vSwitch2

esxcfg-vswitch -A NewServiceConsole vSwitch2

esxcfg-vswif -a vswif2 -p NewServiceConsole -i 192.168.0.10 -n 255.255.255.0

NOTE: The above commands create a new standard vSwitch, a port group and a Service Console interface on that port group. They are typically used to recover from some vDS scenarios.
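The recovery sequence is easy to get wrong under pressure. The hypothetical Python helper below assembles the same esxcfg commands and first checks the IP address and netmask with the standard ipaddress module. The switch, uplink, port group and vswif names are parameters that default to the values used above. The -p flag attaches the new vswif to the port group.

```python
import ipaddress
import shlex


def recovery_commands(ip: str, netmask: str, vswitch: str = "vSwitch2",
                      uplink: str = "vmnic2", portgroup: str = "NewServiceConsole",
                      vswif: str = "vswif2") -> list[list[str]]:
    """Build (but do not run) the esxcfg calls that recreate a Service Console on a vSS."""
    ipaddress.IPv4Address(ip)  # raises ValueError on a malformed address
    net = ipaddress.IPv4Network(f"{ip}/{netmask}", strict=False)
    if str(net.netmask) != netmask:
        # Rejects hostmasks like 0.0.0.255 that ipaddress would otherwise accept.
        raise ValueError(f"not a valid dotted-decimal netmask: {netmask!r}")
    return [
        ["esxcfg-vswitch", "-a", vswitch],             # create the standard vSwitch
        ["esxcfg-vswitch", "-L", uplink, vswitch],     # link a physical uplink
        ["esxcfg-vswitch", "-A", portgroup, vswitch],  # add the port group
        ["esxcfg-vswif", "-a", vswif, "-p", portgroup, "-i", ip, "-n", netmask],
    ]


if __name__ == "__main__":
    for cmd in recovery_commands("192.168.0.10", "255.255.255.0"):
        print(shlex.join(cmd))
```

If the management network uses a VLAN, you would still need to tag the port group (esxcfg-vswitch -v <VLAN ID> -p NewServiceConsole vSwitch2) before the console is reachable.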

Commands for configuring a vDS

There are very few commands for configuring a vDS, and I’ve covered the ones that exist in section 2.4, which is dedicated to the vDS. See VMware KB 1008127 (configuring a vDS from the command line). It’s also worth watching session TA6862 (vDS Deep Dive: Managing and Troubleshooting) from VMworld 2010, although you’ll need a current subscription.

Analyze command line output to identify vSS and vDS configuration details

Things to look out for above and beyond the basic vSwitch, uplink and port group information:

- MTU

- CDP

- VLAN configuration
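When reading esxcfg-vswitch -l output, the MTU sits on the switch line and the VLAN ID sits on each port group line. The Python sketch below pulls both out of a captured listing. The sample text is illustrative only, and the layout it assumes (columns separated by two or more spaces, with "Switch Name" and "PortGroup Name" header lines) may differ between ESX versions. vDS blocks are skipped. CDP isn’t in this listing; query it separately with esxcfg-vswitch -b <vSwitch>.

```python
import re

# Illustrative output only; the exact column layout can vary between ESX versions.
SAMPLE = """\
Switch Name      Num Ports   Used Ports  Configured Ports  MTU     Uplinks
vSwitch0         64          5           64                1500    vmnic0,vmnic1

  PortGroup Name        VLAN ID  Used Ports  Uplinks
  VM Network            0        2           vmnic0,vmnic1
  Service Console       10       1           vmnic0,vmnic1

Switch Name      Num Ports   Used Ports  Configured Ports  MTU     Uplinks
vSwitch1         64          3           64                9000    vmnic2

  PortGroup Name        VLAN ID  Used Ports  Uplinks
  iSCSI                 20       1           vmnic2
"""


def _uplinks(fields: list[str], index: int) -> list[str]:
    """Return the comma-separated uplink column, or [] if the column is empty."""
    return fields[index].split(",") if len(fields) > index else []


def parse_vswitch_list(text: str) -> dict[str, dict]:
    """Extract MTU, uplinks and per-port-group VLAN IDs for standard vSwitches."""
    switches: dict[str, dict] = {}
    current = None
    section = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("Switch Name"):
            section = "switch"
            continue
        if line.startswith("PortGroup Name"):
            section = "portgroup"
            continue
        if line.startswith("DVS Name"):
            section, current = None, None  # vDS blocks are skipped in this sketch
            continue
        # Columns are separated by runs of spaces; names may contain single spaces.
        fields = re.split(r"\s{2,}", line)
        if section == "switch":
            current = {"mtu": int(fields[4]), "uplinks": _uplinks(fields, 5), "portgroups": {}}
            switches[fields[0]] = current
        elif section == "portgroup" and current is not None:
            current["portgroups"][fields[0]] = {
                "vlan": int(fields[1]),
                "uplinks": _uplinks(fields, 3),
            }
    return switches


def jumbo_switches(switches: dict[str, dict]) -> list[str]:
    """Names of vSwitches whose MTU is above the 1500-byte default."""
    return [name for name, sw in switches.items() if sw["mtu"] > 1500]


if __name__ == "__main__":
    parsed = parse_vswitch_list(SAMPLE)
    for name, sw in parsed.items():
        vlans = {pg: info["vlan"] for pg, info in sw["portgroups"].items()}
        print(name, "MTU", sw["mtu"], "VLANs", vlans)
    print("Jumbo frames:", jumbo_switches(parsed))
```

A VLAN ID of 0 means the port group isn’t tagged (EST). A mismatch between the vSwitch MTU and the physical switch is a classic cause of jumbo-frame problems.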

See section 6.3 (Troubleshooting Network Connectivity) for more information.