Managing storage capacity is another potentially huge topic, even for a midsized company. The storage management functionality within vSphere is fairly comprehensive and a significant improvement over VI3.

Knowledge

- Identify storage provisioning methods

- Identify available storage monitoring tools, metrics and alarms

Skills and Abilities

- Apply space utilization data to manage storage resources

- Provision and manage storage resources according to Virtual Machine requirements

- Understand interactions between virtual storage provisioning and physical storage provisioning

- Apply VMware storage best practices

- Configure datastore alarms

- Analyze datastore alarms and errors to determine space availability

Tools & learning resources

- vSphere Datacenter Administration Guide

- Fibre Channel SAN Configuration Guide

- iSCSI SAN Configuration Guide

- vSphere Command-Line Interface Installation and Scripting Guide

- vmkfstools

- Marc Polo’s study guide notes

Storage provisioning methods

There are three main protocols you can use to provision storage;

- Fibre Channel
  - Block protocol
  - Uses multipathing (PSA framework)
  - Configured via vicfg-mpath, vicfg-scsidevs

- iSCSI
  - Block protocol
  - Uses multipathing (PSA framework)
  - Hardware or software initiator (boot from SAN is hardware initiator only)
  - Configured via vicfg-iscsi, esxcfg-swiscsi and esxcfg-hwiscsi, vicfg-mpath, esxcli

- NFS
  - File level (not block)
  - No multipathing (relies on the resilience of the underlying Ethernet network)
  - Thin provisioned by default
  - No RDMs or MSCS
  - Configured via vicfg-nas

I won’t go into much detail on each; just make sure you’re happy provisioning storage for each protocol, both in the VI client and the CLI.

Know the various options for provisioning storage;

- VI client. Can be used to create/extend/delete all types of storage. VMFS volumes created via the VI client are automatically aligned.

- CLI – vmkfstools.
  - NOTE: When creating a VMFS datastore via the CLI you need to align it yourself. Check VMFS alignment using ‘fdisk -lu’. Read more in Duncan Epping’s blog post.

- PowerCLI. Managing storage with PowerCLI – VMwareKB1028368

- Vendor plugins (NetApp RCU for example). I’m not going to cover these here as I doubt the VCAP-DCA exam environment will include (or assume any knowledge of) them!
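The alignment check mentioned above comes down to simple arithmetic on the partition’s start sector as reported by ‘fdisk -lu’. Below is a minimal bash sketch, assuming 512-byte sectors and a 64KB boundary (start sector 128, which is what the VI client uses); the function name is hypothetical, and if your array vendor recommends a different boundary pass it as the second argument.

```bash
#!/usr/bin/env bash
# Hypothetical helper: is a partition start sector (from `fdisk -lu`) aligned?
# Assumes 512-byte sectors; the boundary defaults to 64KB (128 sectors).
is_aligned() {
  local start_sector=$1
  local boundary_bytes=${2:-65536}
  local sector_bytes=512
  # Aligned if the partition's byte offset is a whole multiple of the boundary.
  if (( start_sector * sector_bytes % boundary_bytes == 0 )); then
    echo "aligned"
  else
    echo "misaligned"
  fi
}
```

For example, `is_aligned 128` prints aligned, while `is_aligned 63` (the old fdisk default start sector) prints misaligned.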

When provisioning storage there are various considerations;

- Thin vs thick

- Extents vs true extension (growing the underlying LUN and the VMFS volume on it)

- Local vs FC/iSCSI vs NFS

- VMFS vs RDM

Using vmkfstools

Create a 10GB VMDK (defaults to zeroedthick with a BUSLOGIC adapter);

vmkfstools -c 10g <path to VMDK>

Create a 10GB VMDK in eagerzeroedthick format with an LSILOGIC adapter;

vmkfstools -c 10g -d eagerzeroedthick -a lsilogic /vmfs/volumes/zcglabsvr7local/test.vmdk

Extend the virtual disk to 20GB (-X takes the new total size, not the amount to add);

vmkfstools -X 20g <path to VMDK>

Inflate a thin virtual disk to eagerzeroedthick (thin to thick provisioning);

vmkfstools -j <path to VMDK>

Create an RDM in virtual compatibility mode;

vmkfstools -r /vmfs/devices/disks/naa.600c0ff000d5c3830473904c01000000 myrdm.vmdk

Create an RDM in physical compatibility mode;

vmkfstools -z /vmfs/devices/disks/naa.600c0ff000d5c3830473904c01000000 myrdmp.vmdk
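When you need several disks in the same format (for example eagerzeroedthick disks for an FT VM), it can help to generate the vmkfstools commands first and review them before running anything. The bash sketch below only prints commands; the function name and example path are hypothetical, and it deliberately accepts only the formats and adapters discussed above (-c, -d and -a options).

```bash
#!/usr/bin/env bash
# Hypothetical helper: build (and only print) a vmkfstools create command.
# Usage: vmdk_create_cmd <size, e.g. 10g> <path> [format] [adapter]
vmdk_create_cmd() {
  local size=$1 path=$2 format=${3:-zeroedthick} adapter=${4:-buslogic}
  case $format in
    zeroedthick|eagerzeroedthick|thin) ;;
    *) echo "unsupported format: $format" >&2; return 1 ;;
  esac
  case $adapter in
    buslogic|lsilogic) ;;
    *) echo "unsupported adapter: $adapter" >&2; return 1 ;;
  esac
  echo "vmkfstools -c $size -d $format -a $adapter $path"
}

# Example (hypothetical path):
# vmdk_create_cmd 10g /vmfs/volumes/datastore1/ftvm/ftvm_1.vmdk eagerzeroedthick lsilogic
```

Once the printed commands look right you can run them on the host, for example by piping the output to sh.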

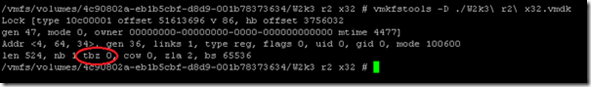

Check the format of a VMDK to determine whether it’s eagerzeroedthick (required for FT and MS clustering). If the ‘tbz’ (to be zeroed) value is zero, the disk is eagerzeroedthick. You can read a full description of this process in VMwareKB1011170;

vmkfstools -D <path to VMDK>
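If you need to check many disks, the ‘tbz’ test can be scripted. A minimal bash sketch, assuming the relevant -D output line contains a token of the form ‘tbz <number>’; where that output appears on your ESX version (on screen or in a log) is covered in the KB, so treat the input source as an assumption. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the first "tbz <n>" value from text on stdin.
# Assumes the vmkfstools -D output contains a token of the form "tbz <number>".
tbz_value() {
  sed -n 's/.*tbz \([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Classify a disk from its tbz value: 0 means eagerzeroedthick.
disk_type_from_tbz() {
  local tbz
  tbz=$(tbz_value)
  if [ -z "$tbz" ]; then
    echo "unknown"
  elif [ "$tbz" -eq 0 ]; then
    echo "eagerzeroedthick"
  else
    echo "not eagerzeroedthick (tbz $tbz)"
  fi
}
```

Pipe the lines containing the -D output into disk_type_from_tbz; if no tbz token is found it reports unknown rather than guessing.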

A very useful VMwareKB article about vmkfstools

Storage monitoring tools, metrics and alarms

Native storage monitoring GUI tools include datastore alarms, the ‘Storage Views’ plugin (new to vSphere), vCenter performance charts and the datastore inventory view.

- The datastore inventory view shows which hosts have a given datastore mounted and how much free space it has, lets you track events and tasks per datastore (for auditing, for example) and shows permissions per datastore.

- The Storage Views tab shows more detailed information about capacity, pathing status, storage maps, snapshot sizes (very useful) etc

- vCenter performance charts help you analyse bandwidth, latency, IOps and more. Using vCenter Charts is covered in section 3.4, Perform Capacity Planning in a vSphere environment.

- NOTE: Most array vendors provide tools which are far more sophisticated than the native vSphere tools for monitoring capacity, performance and vendor specific functionality (such as dedupe) although they’re rarely free!

Creating datastore alarms

When creating an alarm you can monitor either ‘events’ or ‘condition changes’ on the object in question, in this case datastores.

When configured for event monitoring you can monitor;

- VMFS datastore created/extended/deleted

- NAS datastore created/extended/deleted

- File or directory copied/deleted/moved

When configured for ‘condition changes’ you can monitor;

- Disk % full

- Disk % over allocated

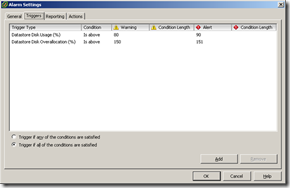

- Datastore to All Hosts

While these seem straightforward there are complications. If you’re using NFS and array-level deduplication, for example, the ‘savings’ from dedupe are reflected in vCenter as free space. If you set an alarm to monitor only ‘%Disk full’ you may find you’ve massively overprovisioned the volume (NFS is also thin provisioned by default) before you reach a capacity alert. The solution is to monitor both ‘%Disk overallocation’ and ‘%Disk full’ and only generate an alert if both conditions are met.
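To make the ‘both conditions’ logic concrete, here is a small bash sketch that evaluates it outside vCenter (in vCenter itself you would add both triggers and have the alarm fire only when all conditions are satisfied). It assumes overallocation is provisioned space expressed as a percentage of capacity; the function name and the default thresholds (80% full, 120% overallocated) are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: alert only when BOTH thresholds are exceeded.
# Assumption: overallocation % = provisioned / capacity * 100.
# Integer arithmetic on GB values; thresholds default to 80% and 120%.
datastore_alert() {
  local capacity_gb=$1 free_gb=$2 provisioned_gb=$3
  local full_threshold=${4:-80} overalloc_threshold=${5:-120}
  local pct_full=$(( (capacity_gb - free_gb) * 100 / capacity_gb ))
  local pct_overalloc=$(( provisioned_gb * 100 / capacity_gb ))
  if (( pct_full >= full_threshold && pct_overalloc >= overalloc_threshold )); then
    echo "ALERT full=${pct_full}% overallocated=${pct_overalloc}%"
  else
    echo "OK full=${pct_full}% overallocated=${pct_overalloc}%"
  fi
}
```

For example, a 1000GB datastore with 100GB free and 1500GB provisioned gives `ALERT full=90% overallocated=150%`, whereas one with 600GB free and 2000GB provisioned stays OK because it is only 40% full.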

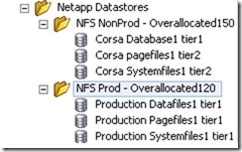

A solution I’ve implemented previously is to create multiple ‘service levels’ by grouping your datastores into separate folders and setting different alarms on each folder. For instance, I want an alert if a production datastore’s overallocation exceeds 120%, but I’m happy for this threshold to be 150% for non-production VMs.
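The folder-based service levels amount to a lookup from folder to threshold. A bash sketch using the 120% and 150% figures from the text; the folder names ‘Production’ and ‘NonProduction’, the function names, and the overallocation formula (provisioned / capacity × 100) are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical folder names mapped to overallocation thresholds (percent).
overalloc_threshold_for_folder() {
  case $1 in
    Production)    echo 120 ;;
    NonProduction) echo 150 ;;
    *) echo "unknown folder: $1" >&2; return 1 ;;
  esac
}

# Succeeds (exit 0) if the datastore is overallocated beyond its folder's level.
# Returns 2 for an unknown folder.
exceeds_service_level() {
  local folder=$1 capacity_gb=$2 provisioned_gb=$3
  local threshold
  threshold=$(overalloc_threshold_for_folder "$folder") || return 2
  (( provisioned_gb * 100 / capacity_gb > threshold ))
}
```

With 1300GB provisioned on a 1000GB datastore (130%), `exceeds_service_level Production 1000 1300` succeeds while the same figures in NonProduction do not.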

There are also storage capacity related alarms on the VM objects;

- VM Total size on disk

- VM snapshot size

- VM Disk usage (kbps)

Understand interactions between virtual storage and physical storage

Wide open topic, which will also vary depending on the storage vendor. Always remember that a ‘simple’ change can have unexpected implications;

- Increasing capacity (extending a datastore using an extent) could improve or degrade performance depending on the underlying storage array (for example, if the new LUN sits on a few slow spindles). Your requirement to increase capacity might also impact availability: if a second LUN is provisioned and added as an extent you now have two LUNs to manage, and a failure of either can take the datastore offline.

- Consider queues – disk queues, HBA queues, LUN queues etc. Read more.

- Consider SCSI reservations (number of VMs per VMFS). See VMwareKB1005009, and section 6.4 for information about troubleshooting SCSI reservations. Read more here.

- If you defragment (or run a full format of) a disk in the guest OS, it will inflate the underlying thin provisioned VMDK, potentially to its maximum size.

- Thin or thick may have performance implications: if the disk is eagerzeroedthick then the blocks on the underlying storage need to be zeroed out up front, so provisioning takes longer. NOTE: There is very little performance impact from using thin disks under typical circumstances (VMware whitepaper on thin provisioning performance).

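- A vMotion can’t impact storage, right? The VMDKs are shared and don’t move. But what if your virtual machine swap files are on local disk? Then a new swap file has to be created on the destination host’s local storage (and any swapped-out pages copied across), so the vMotion does generate storage I/O and can take longer.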

- A VM created in a datastore won’t necessarily consume the same amount of space on the underlying array, and it can affect DR too: dedupe, SnapMirror and snap reserve are all impacted.

- VAAI – vSphere 4.1 introduced ‘array offloading’, which changes the interaction between virtual and physical storage, but the VCAP-DCA lab is currently built on vSphere 4.0.

- You can change disk format while doing a svMotion (from thick to thin for example). These operations have an impact on your storage array so consider doing mass migrations out of hours (or whenever your array is less busy).

Apply VMware Storage best practices

Provision and manage storage resources according to Virtual Machine requirements

Section 6.4 (Troubleshooting Storage) is where the blueprint lists ‘recall vSphere maximums’ but it makes more sense to cover them here as they impact storage capacity planning. The relevant limits;

- 255 LUNs per host

- 32 paths per LUN

- 1024 paths per host

- 256 VMs per VMFS volume

- 2TB minus 512 bytes max per VMFS extent

- 32 extents per VMFS (for a max 64TB volume)

- 8 NFS datastores (default, can be increased to 64)

- 8 HBAs per host

- 16 HBA ports per host
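Paths are often the limit you hit first, because the per-host path maximum caps the number of LUNs once multipathing is in play. A bash sketch that checks a planned design against some of the limits listed above; the variable and function names are hypothetical and the values are copied from the list, so verify them against the Configuration Maximums document for your release.

```bash
#!/usr/bin/env bash
# Limits copied from the list above (vSphere 4); names are hypothetical.
MAX_LUNS_PER_HOST=255
MAX_PATHS_PER_LUN=32
MAX_PATHS_PER_HOST=1024
MAX_EXTENT_BYTES=$(( 2 * 1024**4 - 512 ))   # 2TB minus 512 bytes
MAX_EXTENTS=32
# 32 full-size extents: 70368744161280 bytes, i.e. 16KB short of 64TB.
MAX_VMFS_BYTES=$(( MAX_EXTENTS * MAX_EXTENT_BYTES ))

# Check a planned number of LUNs and paths per LUN against the host limits.
check_design() {
  local luns=$1 paths_per_lun=$2
  local total_paths=$(( luns * paths_per_lun ))
  (( luns <= MAX_LUNS_PER_HOST )) || { echo "too many LUNs: $luns"; return 1; }
  (( paths_per_lun <= MAX_PATHS_PER_LUN )) || { echo "too many paths per LUN: $paths_per_lun"; return 1; }
  (( total_paths <= MAX_PATHS_PER_HOST )) || { echo "too many paths: $total_paths"; return 1; }
  echo "ok: $luns LUNs, $total_paths paths"
}
```

For example, with 8 paths per LUN the 1024-path limit allows at most 128 LUNs, well below the 255-LUN maximum, so `check_design 200 8` fails while `check_design 255 4` passes.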

Reference the full list; vSphere Configuration Maximums

- When sizing VMFS volumes, ensure you account for snapshots, VM swap files etc. as well as VMDK sizes.

- When sizing LUNs there are two primary options;
  - Predictive sizing. Understand the IO requirements of your apps first, then create multiple VMFS volumes with different storage characteristics. Place each VM on the appropriate LUN.
  - Adaptive sizing. Create a few large LUNs and start placing VMs on them. Check performance and create new LUNs when performance is impacted.
  - Also consider that different VM workloads may need different performance characteristics (i.e. underlying RAID levels), which can affect LUN size and layout.

- Use disk shares (and SIOC with vSphere 4.1) to control storage contention. With disk shares, contention is regulated per host; with SIOC it’s regulated across all hosts accessing the datastore.
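The VMFS sizing advice can be turned into a rough calculation. A bash sketch, assuming each VM’s swap file equals its configured memory minus its memory reservation; the 20% default headroom for snapshots and logs is a hypothetical planning figure, not a VMware recommendation, and the function name is also hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rough VMFS volume size in GB.
# Reads one line per VM from stdin: "<vmdk_gb> <memory_gb> <memory_reservation_gb>".
# Swap file (.vswp) size = configured memory - memory reservation.
# $1 = headroom percentage for snapshots, logs etc. (default 20, an assumption).
vmfs_size_gb() {
  local headroom_pct=${1:-20}
  local vmdk mem res total=0
  while read -r vmdk mem res || [ -n "$vmdk" ]; do
    [ -z "$vmdk" ] && continue   # skip blank lines
    total=$(( total + vmdk + mem - res ))
  done
  # Integer arithmetic: the headroom is rounded down to whole GB.
  echo $(( total + total * headroom_pct / 100 ))
}
```

For example, two VMs with 40GB disk/4GB RAM/no reservation and 60GB disk/8GB RAM/4GB reserved need 108GB before headroom, so `printf '40 4 0\n60 8 4\n' | vmfs_size_gb 20` prints 129.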
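Shares only matter under contention, and the split between competing disks is proportional. A bash sketch of that arithmetic using the vSphere preset values for disk shares (Low 500, Normal 1000, High 2000); the function names are hypothetical, and the percentages are the share of contended I/O among the disks passed in.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: proportional split of contended disk I/O by shares.
# Preset values are the vSphere defaults: low=500, normal=1000, high=2000.
share_value() {
  case $1 in
    low)    echo 500 ;;
    normal) echo 1000 ;;
    high)   echo 2000 ;;
    *)      echo "$1" ;;   # anything else is assumed to be a custom numeric value
  esac
}

# Prints each argument with its percentage (rounded down) of the total shares.
share_split() {
  local total=0 level v
  for level in "$@"; do
    total=$(( total + $(share_value "$level") ))
  done
  for level in "$@"; do
    v=$(share_value "$level")
    echo "$level $(( v * 100 / total ))%"
  done
}
```

For example, `share_split high normal normal` gives the High disk 50% and each Normal disk 25% of the contended I/O.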
