Knowledge

- Explain DRS affinity and anti-affinity rules

- Identify required hardware components to support DPM

- Identify EVC requirements, baselines and components

- Understand the DRS slot-size algorithm and its impact on migration recommendations

Skills and Abilities

- Properly configure BIOS and management settings to support DPM

- Test DPM to verify proper configuration

- Configure appropriate DPM Threshold to meet business requirements

- Configure EVC using appropriate baseline

- Change the EVC mode on an existing DRS cluster

- Create DRS and DPM alarms

- Configure applicable power management settings for ESX Hosts

- Properly size virtual machines and clusters for optimal DRS efficiency

- Properly apply virtual machine automation levels based upon application requirements

Tools & learning resources

- Product Documentation

- vSphere Resource Management Guide

- vSphere Datacenter Administration Guide (not listed in blueprint, but details EVC)

- vSphere Client

- DRS Resource Distribution Chart

- Frank Denneman’s blogpost on using DPM with DRS

- Jason Boche’s blogpost about DPM UI consistency

- Duncan Epping and Frank Denneman’s HA and DRS book

- DRS limitations with vSMP

- Fine tuning the DRS algorithm

- The math behind the DRS algorithm

- Community blogpost – good log files for troubleshooting EVC

- Frank Denneman’s excellent post on VM-Host affinity rules

Advanced DRS

- Read the DRS deepdive at Yellow Bricks.

- Use the (new to vSphere) DRS Faults and DRS History tabs to investigate issues with DRS

- By default DRS recalculates every 5 minutes (including DPM recommendations), but it also does so when resource settings are changed (reservations, adding/removing hosts etc.). For a full list of actions which trigger DRS calculations see Frank Denneman’s HA/DRS book.

- It’s perfectly possible to turn on DRS even though all prerequisite functionality isn’t enabled – for example if vMotion isn’t enabled you won’t be prompted (at least until you try to migrate a VM)!

Affinity and anti-affinity rules

There are two types of affinity/anti-affinity rules;

- VM-VM (new in vSphere v4.0)

- VM-Host (new to vSphere 4.1)

The VM-VM affinity is pretty straightforward. Simply select a group of two or more VMs and decide if they should be kept together (affinity) or apart (anti-affinity). Typical use cases;

- Webservers acting in a web farm (set anti-affinity to keep them on separate hosts for redundancy)

- A webserver and associated application server (set affinity to optimise networking by keeping them on the same host)
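To make the two use cases concrete, here's a minimal sketch in plain Python (not the vSphere API) that checks whether a given VM placement satisfies a VM-VM rule. The VM names, host names and the `check_vm_vm_rule` helper are all made up for illustration.

```python
def check_vm_vm_rule(placement, vms, keep_together):
    """Return True if the placement satisfies one VM-VM rule.

    placement     -- dict mapping VM name -> host name (hypothetical data)
    vms           -- the VMs named in the rule
    keep_together -- True for affinity, False for anti-affinity
    """
    hosts = [placement[vm] for vm in vms]
    if keep_together:
        # Affinity: every VM in the rule shares a single host.
        return len(set(hosts)) == 1
    # Anti-affinity: no two VMs in the rule share a host.
    return len(set(hosts)) == len(hosts)


placement = {"web01": "esx1", "web02": "esx2", "app01": "esx1"}

# Web farm members kept apart: satisfied, they are on esx1 and esx2.
anti_ok = check_vm_vm_rule(placement, ["web01", "web02"], keep_together=False)
# Webserver and its app server kept together: satisfied, both on esx1.
aff_ok = check_vm_vm_rule(placement, ["web01", "app01"], keep_together=True)
# The same pair under anti-affinity would be violated.
clash = check_vm_vm_rule(placement, ["web01", "app01"], keep_together=False)
print(anti_ok, aff_ok, clash)
```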

VM-Host affinity is a new feature (with vSphere 4.1) which lets you ‘pin’ one or more VMs to a particular host or group of hosts. Use cases I can think of;

- Pin the vCenter server to a couple of known hosts in a large cluster

- Pin VMs for licence compliance (think Oracle, although apparently they don’t recognise this new feature as being valid – see the comments in this post)

- Microsoft clustering (see section 4.3 for more details on how to configure this)

- Multi-tenancy (cloud infrastructures)

- Blade environments (ensure VMs run on different chassis in case of backplane failure)

- Stretched clusters (spread between sites. See this Netapp post for Metrocluster details)

To implement them;

- Define ‘pools’ of hosts.

- Define ‘pools’ of VMs.

- Create a rule pairing one VM group with one host group.

- Specify either affinity (keep together) or anti-affinity (keep apart).

- Specify either ‘should’ or ‘must’ (preference or mandatory)
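The steps above can be modelled roughly as follows. This is only a sketch of how I think about the rule semantics, not how DRS is implemented: the `VmHostRule` class, the helper functions and all the names are hypothetical. A 'must' rule removes hosts from consideration, while a 'should' rule only affects the order of preference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmHostRule:
    vm_group: frozenset    # the 'pool' of VMs
    host_group: frozenset  # the 'pool' of hosts
    affinity: bool         # True = keep on the host group, False = keep off it
    mandatory: bool        # True = 'must', False = 'should'


def host_allowed(rule, vm, host):
    """Is `host` an acceptable home for `vm` under this single rule?"""
    if vm not in rule.vm_group:
        return True  # the rule doesn't apply to this VM
    in_group = host in rule.host_group
    return in_group if rule.affinity else not in_group


def candidate_hosts(rules, vm, hosts):
    """Hosts that satisfy every 'must' rule, fewest 'should' violations first."""
    allowed = [h for h in hosts
               if all(host_allowed(r, vm, h) for r in rules if r.mandatory)]
    return sorted(allowed, key=lambda h: sum(
        not host_allowed(r, vm, h) for r in rules if not r.mandatory))


hosts = ["esx1", "esx2", "esx3", "esx4"]
rules = [
    # Licence compliance: oracle01 must run on esx1 or esx2.
    VmHostRule(frozenset({"oracle01"}), frozenset({"esx1", "esx2"}), True, True),
    # Preference only: oracle01 should stay off esx1.
    VmHostRule(frozenset({"oracle01"}), frozenset({"esx1"}), False, False),
]
oracle_hosts = candidate_hosts(rules, "oracle01", hosts)
web_hosts = candidate_hosts(rules, "web01", hosts)
print(oracle_hosts)
print(web_hosts)
```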

This is configured in the ‘DRS Groups Manager’ and ‘Rules’ pages of the DRS Properties;

NOTE: The ‘Rules’ tab was available in v4.0, but the DRS Groups Manager tab is new in v4.1. In v4.0 you could already set VM-VM rules; only the VM-Host rules are new.

NOTE: You only need vCenter v4.1 to get the new Host-VM affinity functionality – the hosts themselves can still be running v4.0.

Properly size VMs and clusters for optimal DRS efficiency

Use reservations sparingly – they impact slot size (see section 4.1 Complex HA for details of the slot size algorithm).

VM-Host affinity rules should be used with caution and sparingly, especially the mandatory rules. HA, DRS, and DPM are all aware of and bound by these rules, which could impact their efficiency. VM-VM affinity rules are less of an issue because a) HA ignores them and b) they don’t include hosts in their rules (and DRS load balancing is done amongst hosts). Operations which can be blocked by a VM-Host rule include;

- Putting a host into maintenance mode

- Putting a host into standby mode (DPM)
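A rough illustration of why a mandatory rule can block these operations: if a VM must run on a set of hosts and the only member of that set available is the host you want to evacuate, the VM has nowhere to go. The function and data below are hypothetical and only model the idea.

```python
def blocked_evacuations(host, vms_on_host, cluster_hosts, must_run_on):
    """Return the VMs that cannot leave `host` because of a 'must' rule.

    must_run_on -- dict mapping VM name -> set of hosts it must run on
                   (hypothetical stand-in for mandatory VM-Host affinity)
    """
    targets = set(cluster_hosts) - {host}
    blocked = []
    for vm in vms_on_host:
        allowed = must_run_on.get(vm)
        if allowed is None:
            continue  # no mandatory rule, any other host will do
        if not (allowed & targets):
            blocked.append(vm)  # no permitted host left to move to
    return blocked


cluster = ["esx1", "esx2", "esx3"]
must_run_on = {"vcenter": {"esx1"}, "db01": {"esx1", "esx2"}}
stuck = blocked_evacuations("esx1", ["vcenter", "db01", "web01"],
                            cluster, must_run_on)
print(stuck)
```

Here `vcenter` is pinned to esx1 alone, so esx1 can't be evacuated until the rule is relaxed or another host is added to the group.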

Size VMs appropriately – oversizing VMs can affect both slot size (if using reservations), free resources and the DRS load balancing algorithm.

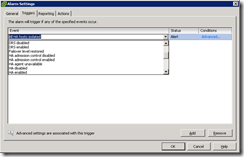

DRS Alarms

There are no alarms predefined for DRS but there are plenty you can define;

I’d have liked a way to get an email notification when a DRS or DPM recommendation was created (in Manual or Partial automation mode) but this doesn’t seem possible.

DRS vs DPM automation level

As pointed out by Jason Boche, the automation level sliders are inconsistent between DRS and DPM. This stems from a difference in the way recommendations are made for the two functions;

- For DRS the highest recommendation is rated 1, lowest is 5. Prior to vSphere 4.1 this was the opposite and used stars instead (i.e. a 5 star recommendation was the highest)

- For DPM the highest recommendation is rated 1, lowest is 5

Be careful when setting these that your setting is as you planned!

Distributed Power Management (DPM)

BIOS requirements

- Ensure WOL is enabled for the NICs (it was disabled by default on my DL380G5)

- Configure the IPMI/iLO

Hardware requirements

Three protocols for using DPM depending on your hardware features/support;

- WOL is supported (or not) by the network cards (although it also requires motherboard support). It allows a server to be ‘woken’ up by sending a ‘magic’ packet to the NIC, even when the server is powered off. See VMware KB1003373 for details. Largely unrelated tip – how to send a ‘magic packet’ using Powershell!

- IPMI.

- iLO/DRAC cards

If a server supports multiple protocols they are used in this order: IPMI, iLO, WOL.
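The preference order is easy to express as a small lookup. This is just a sketch of the ordering described above; the function name and the way supported protocols are passed in are my own invention.

```python
# Order taken from the text above: IPMI first, then iLO, then WOL.
DPM_PROTOCOL_ORDER = ("IPMI", "iLO", "WOL")


def pick_wake_protocol(supported):
    """Return the first protocol in the preference order that the host supports."""
    for proto in DPM_PROTOCOL_ORDER:
        if proto in supported:
            return proto
    return None  # host can't be woken, so leave DPM disabled for it


print(pick_wake_protocol({"WOL", "iLO"}))
print(pick_wake_protocol({"WOL"}))
print(pick_wake_protocol(set()))
```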

NOTE: Unfortunately you can’t use DPM with virtual ESX hosts as the E1000 driver (which is used with vESX) doesn’t support WOL functionality. This feature joins FT as a lab breaker! See this post from vinf.net.

Configuring DPM

You’ll need Advanced licencing or higher to get DPM.

For WOL

- you must use the vMotion (or VMkernel) port (so this must support WOL)

- check the port speed is set to ‘auto’ on the physical switch

- test that a host ‘wakes’ correctly from standby before enabling DPM.
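If you want to check WOL independently of vCenter, a magic packet is simple to build by hand: six 0xFF bytes followed by the target MAC address repeated sixteen times, usually sent as a UDP broadcast. Below is a minimal Python sketch. The broadcast address and UDP port 9 are common defaults I've assumed rather than anything DPM-specific, and the MAC address is a placeholder.

```python
import socket


def build_magic_packet(mac):
    """Build a WOL magic packet: 6 x 0xFF followed by the MAC 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError("expected a 6-byte MAC address, got %r" % mac)
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac, broadcast="255.255.255.255", port=9):
    """Send the packet as a UDP broadcast (address and port are assumed defaults)."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))


# Placeholder MAC; substitute the address of the vMotion NIC under test.
packet = build_magic_packet("00:11:22:33:44:55")
print(len(packet))
# send_magic_packet("00:11:22:33:44:55")  # uncomment to actually send
```

The sender needs to be on the same broadcast domain as the NIC you're trying to wake.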

Configuring IPMI and ILO/DRAC requires extra steps, done via Configuration -> Software -> Power Management. You need to provide;

- Credentials (vCenter uses MD5 if the BMC supports it else falls back to plaintext)

- the IP address of the IPMI/ILO card

- the MAC address of the IPMI/ILO card

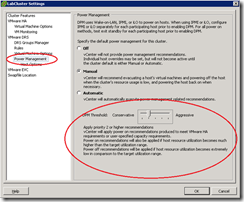

Enabling DPM

- Test each host individually to ensure it’s able to ‘wake’ from Standby mode. Set DPM to disabled for any hosts that don’t support the wakeup protocols.

- Enable DPM on the cluster

- Set the DPM threshold

- Priority-one recommendations are the biggest improvement and priority-five the least. This is the opposite of the similar-looking DRS thresholds – see Jason Boche’s blogpost about DPM UI consistency for details.

- Disable power management for any hosts in the cluster which don’t support the above protocols

Using alarms with DPM

- Define the default alarm for ‘Exit standby error’ so you can manually intervene if a host fails to return from standby.

- Using DPM means hosts will go offline frequently, which makes monitoring host availability difficult as you can’t distinguish a genuine outage from a DPM-initiated power down.

- Optionally you can create alarms for the following events;

- Entering standby mode

- Exiting standby mode

- Successfully entered standby mode

- Successfully exited standby mode

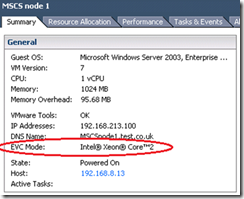

Enhanced vMotion Compatibility (EVC)

EVC increases vMotion compatibility by masking off CPU features which aren’t consistent across the cluster. It’s enabled at cluster level and is disabled by default.

NOTE: EVC does NOT stop VMs from using faster CPU speeds or hardware virtualisation features that might be available on some hosts in the cluster.

NOTE: EVC is required for FT to work with DRS.

Requirements

- All hosts in the cluster must have CPUs from the same vendor (Intel or AMD)

- All hosts must have vMotion enabled (if not who cares about CPU compatibility?)

- Hardware virtualisation must be enabled in the BIOS (if present). This is because EVC runs a check to ensure the processor has the features it thinks should be present in that model of CPU.

NOTE: This includes having the ‘No Execute’ bit enabled.
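As a revision aid, the requirements above can be written as a simple pre-flight check. This is a plain Python sketch with made-up host data: the `HostInfo` fields are hypothetical and don't correspond to real vSphere API properties.

```python
from dataclasses import dataclass


@dataclass
class HostInfo:
    name: str
    cpu_vendor: str        # "Intel" or "AMD"
    vmotion_enabled: bool
    hw_virt_present: bool  # CPU offers hardware virtualisation
    hw_virt_enabled: bool  # ...and it is switched on in the BIOS
    nx_enabled: bool       # 'No Execute' bit enabled


def evc_problems(hosts):
    """Return a list of reasons the hosts would fail the EVC requirements."""
    problems = []
    vendors = sorted({h.cpu_vendor for h in hosts})
    if len(vendors) > 1:
        problems.append("mixed CPU vendors: " + ", ".join(vendors))
    for h in hosts:
        if not h.vmotion_enabled:
            problems.append(f"{h.name}: vMotion not enabled")
        if h.hw_virt_present and not h.hw_virt_enabled:
            problems.append(f"{h.name}: hardware virtualisation disabled in BIOS")
        if not h.nx_enabled:
            problems.append(f"{h.name}: No Execute bit disabled")
    return problems


hosts = [
    HostInfo("esx1", "Intel", True, True, True, True),
    HostInfo("esx2", "Intel", True, True, False, True),
    HostInfo("esx3", "AMD", False, True, True, True),
]
problems = evc_problems(hosts)
for line in problems:
    print(line)
```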

Configuring a new cluster for EVC

- Determine which baseline to use based on the CPUs in your hosts (check the Datacenter Administration Guide chapter 18 for a compatibility table if you don’t know, or the more in-depth VMware KB1003212 although this won’t be available during the exam)

- Configure EVC on the cluster (prior to adding any hosts)

- If the host has newer CPU features (compared to your EVC baseline) power off all VMs on the host.

- Add hosts to the cluster.

Changing the EVC level on an existing cluster

- You can change EVC to a higher baseline with no impact. VMs will not benefit from new CPU features until each VM has been power cycled (a reboot isn’t sufficient).

- When downgrading the EVC baseline you need to power off (or vMotion out of the cluster) all running VMs

- An alternative approach is to create a new cluster, enable the correct EVC mode, and then move the hosts from the old cluster to the new cluster one at a time.
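The raise versus lower behaviour can be summarised in a few lines. In this sketch the EVC baselines are represented by integers where a higher number means a newer baseline. That numbering is purely my own shorthand, as are the function and key names.

```python
def evc_change_plan(current_level, new_level, running_vms):
    """Work out what has to happen to running VMs for an EVC mode change.

    Levels are hypothetical integers: higher = newer baseline.
    """
    plan = {"power_off_or_move_first": [], "power_cycle_later": []}
    if new_level > current_level:
        # Raising the baseline is non-disruptive, but each VM only sees the
        # new CPU features after a full power cycle (a reboot isn't enough).
        plan["power_cycle_later"] = list(running_vms)
    elif new_level < current_level:
        # Lowering the baseline: running VMs must be powered off or
        # vMotioned out of the cluster before the change.
        plan["power_off_or_move_first"] = list(running_vms)
    return plan


raise_plan = evc_change_plan(1, 2, ["web01", "db01"])
lower_plan = evc_change_plan(2, 1, ["web01", "db01"])
print(raise_plan)
print(lower_plan)
```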
